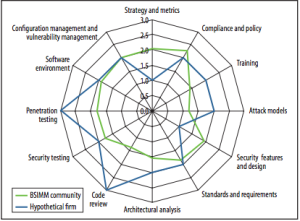

An Application Security Assessment starts by examining the security practices of a solution across its SDLC (Software Development Lifecycle). The key areas examined are broken down into four domains: Governance, Intelligence, SSDL Touch-points, and Deployment. Within those domains, the practices examined are Strategy & Metrics, Compliance & Policy, Training, Attack Models, Security Features & Design, Standards & Requirements, Architecture Analysis, Code Review, Security Testing, Penetration Testing, Software Environment, and Configuration Management & Vulnerability Management.
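As a quick reference, the domains and practices above can be written down as a simple lookup table. This is only a sketch: it assumes the twelve practices split three per domain in the order they are listed above, and the names `DOMAINS` and `domain_of` are made up for illustration.

```python
# Assumption: three practices per domain, in the order the text lists them.
DOMAINS = {
    "Governance": [
        "Strategy & Metrics",
        "Compliance & Policy",
        "Training",
    ],
    "Intelligence": [
        "Attack Models",
        "Security Features & Design",
        "Standards & Requirements",
    ],
    "SSDL Touch-points": [
        "Architecture Analysis",
        "Code Review",
        "Security Testing",
    ],
    "Deployment": [
        "Penetration Testing",
        "Software Environment",
        "Configuration Management & Vulnerability Management",
    ],
}


def domain_of(practice: str) -> str:
    """Return the domain a practice belongs to (hypothetical helper)."""
    for domain, practices in DOMAINS.items():
        if practice in practices:
            return domain
    raise KeyError(f"unknown practice: {practice}")


if __name__ == "__main__":
    for domain, practices in DOMAINS.items():
        print(f"{domain}: {', '.join(practices)}")
```

Keeping the mapping in one place makes it easy to confirm that an assessment has touched every practice in every domain.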

Specifically, a program is examined to ensure that security minimums are in place in at least the following key areas (this list is my own view of the minimums): Training, Architectural Analysis, Code Review, Penetration Testing, and Privacy. These are the standard starting point for any assessment in Application Security.

Each of the areas listed above is examined to understand its maturity. It is important to look at how a security activity is offered, how it is performed, and any external effect it has on the lifecycle. No security practice is an island unto itself. They all work together holistically in the SDLC ecosystem.

An overview of the core security practices and their maturity levels:

Training maturity – Software security training and awareness promote a culture of software security throughout the organization.

- Level 1 – Does the organization make customized, role-based training available to their employees?

- Level 2 – Does the organization create satellite groups within development teams that promote security practices?

- Level 3 – Is the security culture promoted externally with vendors and outsourced contractors? Is recognition given and advancement provided in the training curriculum?

Architectural Analysis maturity – Perform a security feature review and get started with Architectural Analysis.

- Level 1 – Are risk driven architectural reviews done? Does the organization provide a lightweight risk classification?

- Level 2 – Is there an architectural analysis process based on common architectural descriptions and attack models?

- Level 3 – Do software architects lead analysis efforts across the organization, and do they use and provide standard secure architectural patterns?

Code Review maturity – Use manual code review alongside automation. Use automated tools to drive efficiency and consistency.

- Level 1 – Is manual or automated code review being done with centralized reporting? Is code review mandatory for all software projects? Are findings folded back into strategy and training?

- Level 2 – Do automated tools and tool mentors enforce coding standard behaviors in development teams?

- Level 3 – Has an automated code review factory been built to find bugs in the entire code-base?

Penetration Testing maturity – Use Penetration Testers to find problems.

- Level 1 – Are internal or external penetration testers being used? Are the deficiencies being discovered and addressed? Is everyone being made aware of progress?

- Level 2 – Are periodic penetration tests being performed for all applications?

- Level 3 – Is penetration testing knowledge keeping pace with advances in attacker techniques?

Privacy – Identify PII obligations and promote privacy.

- Level 1 – Are statutory, regulatory, and contractual compliance drivers understood and available to all lifecycle stakeholders?

- Level 2 – Do SLAs address the software security properties of vendor software deliverables? Is this backed by executive support? Do risk managers take responsibility for software risk?

- Level 3 – Does data gathered from attacks, threats, defects, and operational issues drive policy? Are policies evolving? Do the demands upon the vendors change because of this?
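The level questions for the core practices lend themselves to a simple scoring pass. The sketch below assumes two things the text does not spell out: that levels are cumulative (Level 2 only counts once every Level 1 question is answered yes), and that "the minimum" for a core practice means reaching Level 1. The names `maturity_level` and `missing_minimums` are hypothetical, and the sample answers are invented purely for illustration.

```python
from typing import Dict, List

# The five core areas named above as the assessment's starting point.
CORE_PRACTICES = [
    "Training",
    "Architectural Analysis",
    "Code Review",
    "Penetration Testing",
    "Privacy",
]

# Answers for one practice: level number -> yes/no answer per question.
Answers = Dict[int, List[bool]]


def maturity_level(answers: Answers) -> int:
    """Highest consecutive level (1-3) whose questions are all 'yes'.

    Assumption: levels are cumulative, so a failed or unanswered
    level stops the climb. Returns 0 if Level 1 is not met.
    """
    level = 0
    for lvl in (1, 2, 3):
        level_answers = answers.get(lvl, [])
        if level_answers and all(level_answers):
            level = lvl
        else:
            break
    return level


def missing_minimums(assessment: Dict[str, Answers]) -> List[str]:
    """Core practices that have not reached Level 1 (assumed minimum)."""
    return [
        practice
        for practice in CORE_PRACTICES
        if maturity_level(assessment.get(practice, {})) < 1
    ]


if __name__ == "__main__":
    # Invented sample answers, one boolean per question at each level.
    sample = {
        "Training": {1: [True], 2: [True], 3: [False, False]},
        "Code Review": {1: [True, True, False]},
        "Penetration Testing": {1: [True, True, True], 2: [False], 3: [True]},
    }
    for practice in CORE_PRACTICES:
        print(practice, maturity_level(sample.get(practice, {})))
    print("Below minimum:", missing_minimums(sample))
```

A practice that was never assessed is treated as Level 0 here, so gaps in the assessment surface the same way as failed minimums.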

Outside the core security practices, the other security practices examined are ones that are common across the industry.

Strategy & Metrics – What SDLC is being used and what gates are enforced?

- Level 1 – Does everyone who is involved with the software lifecycle understand the organization's written security objectives? Is there demonstrated support from the executive level for these efforts?

- Level 2 – Are there individuals that are responsible for the successful performance of secure lifecycle activities? Are activities that lead to unacceptable risk removed and replaced?

- Level 3 – Is risk-based portfolio management being practiced?

Attack Models – Create a data classification scheme and inventory. Prioritize applications by data consumed and data manipulated.

- Level 1 – Is there a knowledge-base built up around attacks and attack data? This includes attacks that have already occurred and attacks that are of concern. Is there a data classification scheme that is used to inventory and prioritize applications?

- Level 2 – Does a security team offer assistance on attackers and relevant attacks?

- Level 3 – Is attack research being done? Is this knowledge being provided to auditors?

Security Features & Design – Build and track a common library of security features for re-use.

- Level 1 – Are architects and developers being provided guidance around security features? Are security features and secure architecture published?

- Level 2 – Are secure-by-design frameworks being provided to lifecycle teams?

- Level 3 – Are defined security features being used across the organization? Do teams understand design choices?

Standards & Requirements – Create security standards.

- Level 1 – Are security artifacts being kept up-to-date and made available to everyone in the organization? Are they easily accessible? At a minimum, the artifacts include security standards, coding standards, and compliance requirements.

- Level 2 – Are formally approved standards communicated internally and to vendors? Are SLAs being enforced? Is usage of open source software understood?

- Level 3 – Is open source software being held to the same standard as the organization's own software?

Security Testing – Drive tests with security requirements and security features.

- Level 1 – Does QA perform functional security testing?

- Level 2 – Has QA included black-box testing tools in their processes?

- Level 3 – Does QA include security testing in automated regression suites? Does security testing follow an attacker's perspective?

Software Environment – Host and network security basics are in place.

- Level 1 – Does the operations group ensure that required security controls are in place and that the integrity of these controls is kept intact? Is monitoring used that includes application input?

- Level 2 – Are application installation and maintenance guides created for operations teams? Is code signing being used?

- Level 3 – Is client-side code protected when leaving the organization? Is software behavior being monitored?

Configuration Management & Vulnerability Management – Use Operations data to change development behavior.

- Level 1 – Do results from CM and VM drive development behavior? Is there an Incident Response program in place?

- Level 2 – Is there emergency response available during application attacks?

- Level 3 – Is there a tight response loop between operations and development for deficiencies found in operations? Are enhancements made in the application that eliminate the root cause?